As global temperatures rise, extreme weather events are becoming more intense and more frequent all around the world.

Over the past two decades, the cutting-edge field of extreme weather attribution has sought to establish the role that human-caused warming has played in these events.

There are now hundreds of attribution studies, assessing extremes ranging from heatwaves in China and droughts in Madagascar through to wildfires in Brazil and extreme rainfall in South Africa.

Carbon Brief has mapped every attribution study published to date, revealing that three-quarters of the extremes analysed were made more intense or likely due to climate change.

Along with this explosion of new studies, the different types of attribution studies have evolved and expanded over the past two decades.

For example, the World Weather Attribution service was established in 2015 to provide rapid-response studies, streamlining the process of estimating the human contribution to extreme events in a matter of days.

Meanwhile, a growing community of researchers are developing the “storyline approach” to attribution that focuses more on the dynamics of the specific events being studied.

Other researchers are using weather forecasts to attribute events that have not even happened yet. And many studies are now combining these methods to get the best of all worlds in their findings.

In this detailed Q&A, Carbon Brief explores how the field of attribution science has evolved over time and explains the key methods used today.

- What are the origins of ‘extreme weather attribution’?

- What is ‘probabilistic’ attribution?

- Which weather extremes can scientists link to climate change?

- Why do scientists perform ‘rapid’ attribution studies?

- Can the impacts of extreme weather be linked to climate change?

- How do scientists attribute ‘unprecedented’ events?

- How can weather forecasts be used in attribution studies?

- What are the applications of attribution science?

- What are the next steps for attribution research?

What are the origins of ‘extreme weather attribution’?

The Intergovernmental Panel on Climate Change (IPCC) made its first mention of attribution in its first assessment report (pdf), published in 1990. In a section called “Attribution and the fingerprint method”, the report refers to attribution as “linking cause and effect”.

In these early days of attribution science, experts used statistical methods to search for the “fingerprint” of human-caused climate change in global temperature records.

However, the 1990 report says that “it is not possible at this time to attribute all or even a large part of the observed global mean warming to the enhanced greenhouse effect on the basis of the observational data currently available”.

As the observational record lengthened and scientists refined their methods, experts became more confident about attributing global temperature rise to human-caused climate change. By the time its third assessment report was published in 2001, the IPCC could state that “detection and attribution studies consistently find evidence for an anthropogenic signal in the climate record of the last 35 to 50 years”.

Just two years later, Prof Myles Allen – professor of geosystem science at the University of Oxford – wrote a Nature commentary from his home in Oxford that would open the door for attributing extreme weather events to climate change. The article begins:

“As I write this article in January 2003, the floodwaters of the River Thames are about 30 centimetres from my kitchen door and slowly rising. On the radio, a representative of the UK Met Office has just explained that although this is the kind of phenomenon that global warming might make more frequent, it is impossible to attribute this particular event (floods in southern England) to past emissions of greenhouse gases. What is less clear is whether the attribution of specific weather events to external drivers of climate change will always be impossible in principle, or whether it is simply impossible at present, given our current state of understanding of the climate system.”

Just months after Oxford’s floodwaters began to recede, a now-infamous heatwave swept across Europe. The summer of 2003 was the hottest ever recorded for central and western Europe, with average temperatures in many countries reaching 5C higher than usual.

The unexpected heat resulted in an estimated 20,000 “excess” deaths, making the heatwave one of Europe’s deadliest on record.

In 2004, Allen and two other UK-based climate scientists produced the first formal attribution study, published in Nature, which estimated the impact of human-caused climate change on the heatwave.

To conduct the study, the authors first chose the temperature “threshold” to define their heatwave. They decided on 1.6C above the 1961-90 average, because the European summer of 2003 was the first on record to exceed this average temperature.

They then used a global climate model to simulate two worlds – one mirroring the world as it was in 2003 and the other a fictional world in which the industrial revolution never happened. In the second case, the climate is influenced solely by natural changes, such as solar energy and volcanic activity, and there is no human-caused warming.

The authors ran their models thousands of times in each scenario from 1989 to 2003. As the climate is inherently chaotic, each model “run” – an individual simulation of how the climate progresses over many years – produces a slightly different progression of temperatures. This means that some runs simulated a heatwave in the summer of 2003, while others did not.

The authors counted how many times the 1.6C threshold temperature was crossed in the summer of 2003 in each model run. They then compared the likelihood of crossing the threshold temperature in a world with – and a world without – climate change.

They concluded that “it is very likely that human influence has at least doubled the risk of a heatwave exceeding this threshold magnitude”.
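The counting step described above can be illustrated with a minimal Python sketch. It is not the study's code: the `simulate_ensemble` function is a hypothetical stand-in for a climate model, and the means, spread and number of runs are invented for illustration. Only the 1.6C threshold comes from the study.

```python
import random

THRESHOLD_C = 1.6  # from the study: summer anomaly relative to 1961-90


def simulate_ensemble(n_runs, mean_anomaly, spread, seed):
    """Hypothetical stand-in for a climate model: one summer anomaly (C) per run."""
    rng = random.Random(seed)
    return [rng.gauss(mean_anomaly, spread) for _ in range(n_runs)]


def exceedance_probability(summer_anomalies, threshold):
    """Fraction of model runs in which the summer anomaly crosses the threshold."""
    hits = sum(1 for anomaly in summer_anomalies if anomaly > threshold)
    return hits / len(summer_anomalies)


# Illustrative parameters only, not values taken from the study.
factual = simulate_ensemble(5000, mean_anomaly=0.6, spread=0.5, seed=1)
counterfactual = simulate_ensemble(5000, mean_anomaly=0.2, spread=0.5, seed=2)

p_factual = exceedance_probability(factual, THRESHOLD_C)
p_counterfactual = exceedance_probability(counterfactual, THRESHOLD_C)

print(f"P(exceed) with human influence:    {p_factual:.4f}")
print(f"P(exceed) without human influence: {p_counterfactual:.4f}")
if p_counterfactual > 0:
    # A ratio of 2 would correspond to a doubling of the risk.
    print(f"Risk ratio: {p_factual / p_counterfactual:.1f}")
```

A real study would also quantify the uncertainty around the ratio, which is what allows a statement such as “very likely … at least doubled”.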

A Nature commentary linked to the study called the paper a “breakthrough”, stating that it was the “first successful attempt to detect man-made influence on a specific extreme climatic event”.

In the decade following the heatwave study, more teams from around the world began to use the same methods – known as “probabilistic”, “risk-based” or “unconditional” attribution.

Prof Peter Stott is a science fellow in climate attribution at the UK Met Office and an author on the study. Stott tells Carbon Brief that the basic methods used in this first attribution study are “still used to this day”, but that scientists now use more “up-to-date” climate models than the one used in his seminal study.

What is ‘probabilistic’ attribution?

As the 2004 Nature study demonstrated, probabilistic attribution involves scientists running climate models thousands of times in scenarios with and without human-caused climate change, then comparing the two.

This allows them to say how much more likely, intense or long-lasting an event was due to climate change.

Many studies since have added a third scenario, in which the planet is warmer than present-day temperatures, to assess how climate change may impact extreme weather events in the future.

The figure below shows three distributions of multiple different simulated extreme events. The x-axis (horizontal) represents the intensity of the climate variable – in this instance temperature – with lower temperatures on the left and higher temperatures on the right. The y-axis (vertical) shows the likelihood of this variable hitting certain values.

Each curve shows how the climate variable behaves in a different scenario, or “world”. The red-shaded curve shows a pre-industrial world that was not warmed by human influence, the yellow-shaded curve indicates today’s climate, while the dashed line shows a future, warmer world. The curves shift from left to right as the climate warms.

The peak of each curve shows the most likely temperatures, while likelihood is lowest at the far left and far right of each curve, where temperatures are most extreme. The hatched areas show the temperatures that cross a predefined “threshold” temperature. (In the attribution study on the 2003 European heatwave, this threshold was defined as 1.6C above the 1961-90 average.)

The three curves show how the threshold is more likely to be crossed as the world warms.
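As a rough illustration of this shift, the sketch below treats each “world” as a bell-shaped (normal) distribution and calculates the chance of crossing a fixed threshold. The normal shape, the means and the spread are assumptions chosen for illustration. Real studies often use distributions designed for extremes.

```python
import math


def normal_exceedance(threshold, mean, std):
    """P(X > threshold) for X following a normal distribution."""
    z = (threshold - mean) / (std * math.sqrt(2))
    return 0.5 * math.erfc(z)


THRESHOLD_C = 1.6  # same threshold as the 2003 heatwave study
SPREAD_C = 0.7     # hypothetical year-to-year variability

# Hypothetical mean summer anomalies (C) for the three worlds.
worlds = {
    "pre-industrial": 0.0,
    "present day": 1.2,
    "future": 2.0,
}

for name, mean in worlds.items():
    p = normal_exceedance(THRESHOLD_C, mean, SPREAD_C)
    print(f"{name:>15}: chance of crossing threshold = {p:.3f}")
```

Shifting the mean to the right while keeping the spread fixed is the simplest possible picture. In practice, the shape of the curve can change as well as its position.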

Which weather extremes can scientists link to climate change?

In 2011, the American Meteorological Society decided to include a “special supplement” about attribution research in its annual report.

The supplement presented six different attribution studies. It generated significant media interest and the “Explaining Extreme Events” report has been published by the Bulletin of the American Meteorological Society almost every year since.

As the research field has grown, so too has the range of different extremes that have been studied.

Heatwaves are generally considered the simplest extreme events to attribute, because they are mainly driven by thermodynamic influences. In contrast, storms and droughts are more strongly affected by complex atmospheric dynamics, so can be trickier to simulate in a model.

The graphic below shows the relative confidence of attributing different types of extreme events.

Attribution studies on extreme heat often assess how much hotter, longer-lasting or more likely an event was due to climate change. For example, one study finds that the summer heatwave that hit France in 2019 was made 1.5-3C hotter due to climate change and about 100 times more likely.

Heatwaves are the most-studied extreme event in attribution literature, but are becoming “less and less interesting for researchers”, according to a Bloomberg article from 2020.

Assessing extreme rainfall is more complicated – in part because the Earth’s chaotic weather system means that the size and path of a storm or heavy rainfall event has a large element of chance, which can make it challenging to identify where climate change fits in.

Nevertheless, many teams have published studies attributing extreme rainfall events and storms. For example, one study (pdf) found that climate change doubled the likelihood of the intense rainfall that fell in northern China in September 2021.

Scientists also study more complex events, such as drought, wildfires and floods, which are impacted by factors including land use and disaster preparedness.

For example, there are many different ways to define a drought. Some are linked just to rainfall, while others consider factors including soil moisture, groundwater and river flow. Some attribution studies investigating the impact of climate change on drought focus only on rainfall deficit, while others (pdf) study temperature or vapour pressure deficit – the difference between the amount of moisture in the air and how much moisture the air can hold when it is saturated.
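To make the definition of vapour pressure deficit concrete, the sketch below calculates it from air temperature and relative humidity. It assumes the widely used Tetens approximation for saturation vapour pressure. The studies mentioned above may use different formulations or input data.

```python
import math


def saturation_vapour_pressure_kpa(temp_c):
    """Moisture the air can hold when saturated (kPa), via the Tetens approximation."""
    return 0.6108 * math.exp(17.27 * temp_c / (temp_c + 237.3))


def vapour_pressure_deficit_kpa(temp_c, relative_humidity_pct):
    """Gap between saturation vapour pressure and actual vapour pressure (kPa)."""
    saturated = saturation_vapour_pressure_kpa(temp_c)
    actual = saturated * relative_humidity_pct / 100.0
    return saturated - actual


# Hypothetical conditions: the same relative humidity on a mild and a hot day.
for temp_c in (20.0, 30.0):
    vpd = vapour_pressure_deficit_kpa(temp_c, relative_humidity_pct=40.0)
    print(f"{temp_c:.0f}C at 40% humidity: VPD = {vpd:.2f} kPa")
```

Because saturation vapour pressure rises steeply with temperature, the deficit grows on hotter days even when relative humidity is unchanged. This is one reason the metric is used in drought and wildfire studies.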

A scientist’s decision about which type of drought to study sometimes depends on the available data and the type of impacts caused by the drought. In other cases, the choice may come down to what caused the biggest impact on people.

For example, in late 2022, South America was plagued by a severe drought that caused widespread crop failure. An attribution study on the event, therefore, focused on “agricultural” drought, which captures the effect of rainfall on soil moisture conditions and is the most relevant for crop health.

Meanwhile, a study on drought in Madagascar over 2019-21 chose to focus on rainfall deficit. The study says “this was because recent research found rainfall deficits were the primary driver of drought in regions of East Africa with very similar climatic properties to south-west Madagascar”.

Wildfires are affected by conditions including temperature, rainfall, wind speed and land use. While some wildfire attribution studies focus on vapour pressure deficit, others quantify the fire weather index, which looks at the effects of fuel moisture and wind on fire behaviour and spread.

Tropical cyclones are also complex. There is evidence that climate change can increase the peak “rain rates” and wind speeds of tropical cyclones, and that storm tracks are shifting poleward. There are many aspects of a cyclone that can be analysed, such as rainfall intensity, storm surge height and storm size.

Why do scientists perform ‘rapid’ attribution studies?

As extreme weather attribution became more mainstream, researchers began to produce studies more quickly. However, challenges in communicating the findings of attribution studies in a timely way soon became evident.

After conducting a study, writing it up and submitting it to a journal, it can still take months or years for research to be published. This means that, by the time an attribution study is published, the extreme event has likely long passed.

The World Weather Attribution (WWA) initiative was founded in 2015 to tackle this issue. The team uses a standard, peer-reviewed methodology for their studies, but does not publish the results in formal journals – instead publishing them directly on their website.

(After publishing these “rapid attribution” studies on their website, the team often write full papers for publication in formal journals, which are then peer reviewed.)

This means that rather than taking months or years to publish their research, the team can make their findings public just days or weeks after an extreme weather event occurs.

In 2021, the founders of the initiative – including Carbon Brief contributing editor Dr Friederike Otto, who is a senior lecturer in climate science at Imperial College London’s Grantham Institute – wrote a Carbon Brief guest post explaining why they founded WWA:

“By reacting in a matter of days or weeks, we have been able to inform key audiences with a solid scientific result swiftly after an extreme event has occurred – when the interest is highest and results most relevant.”

The guest post explains that to conduct an attribution study, the WWA team first uses observed data to assess how rare the event is in the current climate – and how much this has changed over the observed record. This is communicated using a “return period” – how often, on average, an event of this magnitude would be expected to occur under a given climate.

For example, the WWA analysed the UK’s record-shattering heatwave of 2022, when the country recorded temperatures above 40C for the first time. They found that the maximum temperature seen in the UK on 19 July 2022 has a 1,000-year return period in today’s climate – meaning that even in today’s climate, 40C heat would only be expected, on average, once in a millennium.
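A return period can be converted into an annual probability with simple arithmetic, as the sketch below shows. It assumes that each year is independent and that the climate stays fixed over the period considered. Both are simplifications, given that the climate is still warming.

```python
def annual_probability(return_period_years):
    """Chance of the event occurring in any single year."""
    return 1.0 / return_period_years


def chance_of_at_least_one(return_period_years, horizon_years):
    """Chance of seeing the event at least once over a number of years.

    Assumes independent years and an unchanging climate (a simplification).
    """
    p = annual_probability(return_period_years)
    return 1.0 - (1.0 - p) ** horizon_years


# The 1,000-year return period reported for the UK's 40C heat in 2022.
print(f"Chance in any one year: {annual_probability(1000):.3%}")
print(f"Chance over 30 years:   {chance_of_at_least_one(1000, 30):.1%}")
```

A 1,000-year return period does not mean the event happens exactly once every 1,000 years. It means the chance in any given year is one in 1,000.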

The authors then use climate models to carry out the “probabilistic” attribution study, to determine how much more intense, likely or long-lasting the event was as a result of climate change. They conclude by conducting “vulnerability and exposure” analysis, which often highlights other socioeconomic problems.

Sometimes, the authors conclude that climate change did not influence the event. For example, a 2021 rapid attribution study by WWA found that poverty, poor infrastructure and dependence on rain-fed agriculture were the main drivers of the ongoing food crisis in Madagascar, while climate change played “no more than a small part”.

Other groups are also conducting rapid attribution studies. For example, a group of scientists – including some WWA collaborators – recently launched a “rapid experimental framework” research project called ClimaMeter. The tool provides initial attribution results just hours after an extreme weather event takes place.

ClimaMeter focuses on the atmospheric circulation patterns that cause an extreme event – for example, a low-pressure system in a particular region. Once an event is defined, the scientists search the historical record to find events with similar circulation patterns to calculate how the intensity of the events has changed over time.
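The general idea of searching for similar circulation patterns can be sketched as a nearest-neighbour search. The example below is a toy illustration, not ClimaMeter's code. It assumes each day is summarised by a short list of pressure values, uses Euclidean distance as the measure of similarity and compares the closest matches from an earlier and a later period. All data values are invented.

```python
import math


def pattern_distance(a, b):
    """Euclidean distance between two pressure patterns of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def mean_analogue_intensity(event_pattern, records, n_best):
    """Average intensity of the n_best records most similar to the event."""
    ranked = sorted(records, key=lambda r: pattern_distance(event_pattern, r["pattern"]))
    best = ranked[:n_best]
    return sum(r["intensity"] for r in best) / len(best)


# Hypothetical event: pressure (hPa) at three grid points.
event = [1000.0, 995.0, 990.0]

# Hypothetical historical records, split into an earlier and a later period.
past = [
    {"year": 1985, "pattern": [1001.0, 996.0, 991.0], "intensity": 20.0},
    {"year": 1990, "pattern": [1015.0, 1012.0, 1010.0], "intensity": 5.0},
    {"year": 1995, "pattern": [999.0, 994.0, 989.0], "intensity": 22.0},
]
recent = [
    {"year": 2010, "pattern": [1000.0, 996.0, 990.0], "intensity": 26.0},
    {"year": 2015, "pattern": [1020.0, 1018.0, 1015.0], "intensity": 4.0},
    {"year": 2020, "pattern": [1001.0, 995.0, 991.0], "intensity": 28.0},
]

change = mean_analogue_intensity(event, recent, 2) - mean_analogue_intensity(event, past, 2)
print(f"Change in intensity for similar patterns: {change:+.1f}")
```

Comparing like with like in this way holds the circulation pattern roughly constant, so any difference between the two periods is less likely to reflect a different type of weather system.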

Can the impacts of extreme weather be linked to climate change?

A branch of attribution science called “impact attribution” – which aims to quantify how far the social, economic and/or ecological impacts of extreme weather events can be linked to climate change – is also gaining popularity. There are four main types of impact attribution, as shown in the graphic below.

1) Trend-to-trend impact attribution

The first method, called “trend-to-trend” impact attribution, assesses long-term trends in both the climate system and in “health outcomes”. This approach was used in a 2021 study on heat-related mortality around the world, which received extensive media attention.

The authors used data from 732 locations in 43 countries to identify relationships between temperature and mortality in different locations, known as “exposure-response functions”. This allowed them to estimate how many people would die in a given location, if temperatures reach a certain level.

The authors used these relationships to calculate heat-related mortality over 1991-2018 for each location under two scenarios – one with and one without human-caused climate change. The study concluded that 37% of “warm-season heat-related deaths” can be attributed to human-caused climate change.
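The logic of comparing heat-related deaths in two scenarios can be sketched in a few lines of Python. Everything numerical here is hypothetical: the exposure-response function is a simple straight line above a “minimum mortality temperature”, whereas the functions in the study are estimated from data for each location.

```python
def relative_risk(temp_c, min_mortality_temp_c=18.0, slope_per_degree=0.03):
    """Hypothetical exposure-response function: risk rises linearly above a minimum."""
    excess = max(0.0, temp_c - min_mortality_temp_c)
    return 1.0 + slope_per_degree * excess


def heat_deaths(daily_temps_c, baseline_daily_deaths):
    """Heat-related deaths: the share of each day's deaths explained by heat."""
    total = 0.0
    for temp_c in daily_temps_c:
        rr = relative_risk(temp_c)
        total += baseline_daily_deaths * (rr - 1.0) / rr
    return total


def share_due_to_warming(factual_temps_c, warming_c, baseline_daily_deaths):
    """Fraction of heat-related deaths that disappear in a cooler counterfactual."""
    counterfactual = [t - warming_c for t in factual_temps_c]
    with_warming = heat_deaths(factual_temps_c, baseline_daily_deaths)
    without_warming = heat_deaths(counterfactual, baseline_daily_deaths)
    if with_warming == 0:
        return 0.0
    return (with_warming - without_warming) / with_warming


# Hypothetical warm-season temperatures (C) and warming signal.
temps = [22.0, 25.0, 30.0, 27.0]
print(f"Share attributable to warming: {share_due_to_warming(temps, 1.0, 10.0):.0%}")
```

The counterfactual here is created by subtracting a fixed amount of warming from every day, which is an assumption made for brevity rather than a description of the study's approach.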

2) Event-to-event attribution

The second type of study is known as “event-to-event” attribution. In one study using this method, the authors used data on observed mortality rates to determine how many people died in Switzerland during the unusually warm summer of 2022.

They calculated how much climate change contributed to warming during that summer. They then ran a model to calculate the “hypothetical heat-related burden” that would have been seen during the summer without the warming influence of climate change.

Using this method, they estimate that 60% of the 623 heat-related deaths “could have been avoided in absence of human-induced climate change”.

3) Risk-based event attribution

“Risk-based” event impact attribution – which is demonstrated in a more recent study on the 2003 European heatwave – is the third type of impact attribution. This method combines probabilistic event attribution with resulting health outcomes.

When the paper was published, its lead author, Prof Dann Mitchell – a professor of climate science at the University of Bristol – explained the method to Carbon Brief:

“We have a statistical relationship between the number of additional deaths per degree of warming. This is specific to a certain city and changes a lot between cities. We use climate simulations to calculate the heat in 2003, and in 2003 without human influences. Then we compare the simulations, along with the observations.”

They find, for example, that in the summer of 2003, anthropogenic climate change increased the risk of heat-related mortality in London by around 20%. This means that out of the estimated 315 deaths in London during the heatwave, 64 were due to climate change.

4) Fractional attribution

In the final method, known as “fractional” attribution, the authors combine two independent numbers – an estimation of the total damages caused by an extreme weather event, and a calculation of the proportion of the risk from an extreme weather event for which anthropogenic climate change is responsible, known as the “fraction of attributable risk” (FAR).

The authors of one study used this method to estimate the economic damages linked to Hurricane Harvey.

The authors calculate that the “fraction of attributable risk” for the rainfall from Harvey was around three-quarters – meaning that climate change was responsible for three-quarters of the intense rainfall.

Separately, the authors find that according to best estimates, the hurricane caused damages of around US$90bn. From this, the authors conclude that US$67bn of the damages caused by the hurricane’s intense rainfall can be attributed to climate change.

A study on the 2010 Russian heatwave also used this method. The authors found that the heatwave was responsible for more than 55,000 deaths (pdf), and found an 80% chance that the extreme heat would not have occurred without climate warming. The study concludes that almost 45,000 of the deaths were attributable to human-caused climate change.
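The arithmetic behind fractional attribution is simple enough to reproduce. The sketch below uses the standard definition of FAR, one minus the ratio of the event's probability without and with climate change, together with the rounded figures quoted above. The function names are illustrative.

```python
def fraction_of_attributable_risk(p_with, p_without):
    """FAR = 1 - P(event without climate change) / P(event with climate change)."""
    return 1.0 - p_without / p_with


def attributable_share(total_impact, far):
    """Portion of the total impact assigned to climate change."""
    return total_impact * far


# A doubling of risk corresponds to a FAR of one half.
print(f"FAR for a doubled risk: {fraction_of_attributable_risk(0.02, 0.01):.2f}")

# Hurricane Harvey: FAR of about three-quarters, damages of about US$90bn.
harvey_bn = attributable_share(90.0, 0.75)
print(f"Harvey damages attributed: about US${harvey_bn:.1f}bn")

# 2010 Russian heatwave: 80% and roughly 55,000 deaths (rounded inputs).
russia_deaths = attributable_share(55000, 0.8)
print(f"Russian heatwave deaths attributed: about {russia_deaths:,.0f}")
```

Because the inputs are rounded, the outputs only approximately match the published figures. The multiplication also assumes that impacts scale directly with the attributable risk, which is the assumption critics of the method question.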

However, the fractional attribution method has received criticism. One paper argues that the method “inflates the impacts associated with anthropogenic climate change”, because it “incorrectly assumes” that the event has no impact unless it exceeds the threshold defined by the researchers.

Some of the authors of the Hurricane Harvey paper later wrote a paper advising caution in interpreting the results of FAR studies. They say:

“The fraction of attributable risk (FAR) method, useful in extreme weather attribution research, has a very specific interpretation concerning a class of events, and there is potential to misinterpret results from weather event analyses as being applicable to specific events and their impact outcomes…FAR is not generally appropriate when estimating the magnitude of the anthropogenic signal behind a specific impact.”

Expanding scope

Impact attribution is continuing to expand in scope. For example, studies are now being conducted to assess the impact of climate change on disease transmission.

In 2020, scientists quantified the influence of climate change on specific episodes of extreme ice loss from glaciers for the first time. They found that human-caused climate change made the extreme “mass loss” seen in glaciers in the Southern Alps, New Zealand, in 2018 at least 10 times more likely.

Scientists have also linked climate change to ecosystem shifts. One study focusing on temperature finds that the “extremely early cherry tree flowering” seen in Kyoto in 2021 was made 15 times more likely due to climate change.

Others go even further, linking weather extremes to societal impacts. For example, a 2021 study published in Scientific Reports says:

“By combining an extreme event attribution analysis with a probabilistic model of food production and prices, we find that climate change increased the likelihood of the 2007 co-occurring drought in South Africa and Lesotho, aggravating the food crisis in Lesotho.”

Meanwhile, Imperial College London’s Grantham Institute is working on an initiative to publish rapid impact attribution studies about extreme weather events around the world. Similar to WWA studies, these rapid studies will not be peer reviewed individually, but will be based on a peer-reviewed methodology.

Dr Emily Theokritoff – a research associate at Grantham who is working on the initiative – tells Carbon Brief that it will be launched “in the near future”. She adds:

“The aim is to recharge the field, start a conversation about climate losses and damages, and help people understand how climate change is making life more dangerous and more expensive.”

How do scientists attribute ‘unprecedented’ events?

An attribution method known as the “storyline approach” or “conditional attribution” has become increasingly popular over the past decade – despite initially causing controversy in the attribution community.

In this approach, researchers first select an extreme weather event, such as a specific heatwave, storm or drought. They then identify the physical components, such as sea surface temperature, soil moisture and atmospheric dynamics, that led to the event unfolding in the way it did. This series of events is called a “storyline”.

The authors then use models to simulate this “storyline” in two different worlds – one in the world as we know it and one in a counterfactual world – for example, with a different sea surface temperature or CO2 level. By comparing the model runs, the researchers can draw conclusions about how much climate change influenced that event.

The storyline approach is useful for explaining the influence of climate change on the physical processes that contributed to the event. It can also be used to explore in detail how this event would have played out in a warmer (future) or cooler (pre-industrial) climate.

One study describes the storyline approach as an “autopsy”, explaining that it “gives an account of the causes of the extreme event”.

Prof Ted Shepherd, a researcher at the University of Reading, was one of the earliest advocates of the storyline attribution approach. At the EGU general assembly in Vienna in April 2024, Shepherd provided the opening talk in a session on storyline attribution.

He told the packed conference room that the storyline approach was born out of the need for a “forensic” approach to attribution, rather than a “yes/no” approach. He emphasised that extreme weather events have “multiple causes” and that the storyline approach allows researchers to dissect each of these components.

Dr Linda van Garderen is a postdoctoral researcher at Utrecht University and has carried out multiple studies using the storyline method. She tells Carbon Brief that, while traditional attribution typically investigates probability, the storyline approach analyses intensity.

For example, she led an attribution study using the storyline method which concluded that the 2003 European and 2010 Russian heatwaves would have been 2.5-4C cooler in a world without climate change.

She adds that it can make communication easier, telling Carbon Brief that “probabilities can be challenging to interpret in practical daily life, whereas the intensity framing of storyline studies is more intuitive and can make attribution studies easier to understand”.

Dr Nicholas Leach is a researcher at the University of Oxford who has conducted multiple studies using the storyline approach. He tells Carbon Brief that probabilistic attribution often produces “false negatives”, wrongly concluding that climate change did not influence an event.

This is because climate models have “biases and uncertainties” which can lead to “noise” – particularly when it comes to dynamical features such as atmospheric circulation patterns. Probabilistic attribution methods often end up losing the signal of climate change in this noise, he explains.

The storyline approach is able to avoid these issues more easily, he says. By focusing on the dynamics of one specific event, rather than a “broad class of events”, storyline studies can eliminate some of this noise, making it more straightforward to identify a signal, he explains.

Conversely, others have critiqued the storyline method for producing false positives, which wrongly claim that climate change influenced an extreme weather event.

The storyline approach has also been praised for its ability to attribute “unprecedented” events. In the EGU session on the storyline method, many presentations explored how the storyline method could be used to attribute “statistically impossible” extremes.

Leach explains that when a completely unprecedented extreme event occurs, statistical models often indicate that the event “shouldn’t have happened”. When running a probabilistic analysis using these models, Leach explains: “You end up with the present probability being zero and past probability being zero, so you can’t say a lot.”
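The problem Leach describes can be seen in a toy example. In the sketch below, the observed peak is hotter than anything in either set of simulations, so both estimated probabilities are zero and the ratio between them cannot be calculated. All temperatures are invented for illustration.

```python
def empirical_probability(simulated_peaks_c, observed_peak_c):
    """Fraction of simulations that reach or exceed the observed peak."""
    hits = sum(1 for t in simulated_peaks_c if t >= observed_peak_c)
    return hits / len(simulated_peaks_c)


def probability_ratio(p_present, p_past):
    """Ratio of present to past probability, or None when it cannot be computed."""
    if p_past == 0:
        return None  # 0/0 is undefined; x/0 is unbounded
    return p_present / p_past


# Hypothetical simulated peak temperatures (C) and an unprecedented observation.
present_runs = [38.2, 39.5, 41.0, 40.3, 42.1]
past_runs = [36.9, 38.0, 39.4, 38.8, 40.2]
observed_peak = 49.0

p_present = empirical_probability(present_runs, observed_peak)
p_past = empirical_probability(past_runs, observed_peak)
print(f"Present probability: {p_present}, past probability: {p_past}")
print(f"Probability ratio: {probability_ratio(p_present, p_past)}")
```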

He points to the Pacific north-west heatwave of 2021 as an example of this. This event was one of the most extreme regional heat events ever recorded globally, breaking some local high temperature records by more than 6C.

WWA conducted a rapid attribution study on the heatwave, using its probabilistic attribution method. The heatwave was “so extreme” that the observed temperatures “lie far outside the range” of historical observations, the researchers said.

Their assessment suggests that the heatwave was around a one-in-1,000-year event in today’s climate and was made at least 150 times more likely because of climate change.

Leach and his colleagues used the storyline method to attribute the same heatwave. The methods of this study will be discussed more in the following section.

Leach explains that using the storyline approach, he was able to consider the physics of the event, including an atmospheric river that coincided with the “heat dome” that was a key feature of the event. This helped him to represent the event well in his models. The study concluded that the heatwave was 1.3C hotter and eight times more likely as a result of climate change.

Many experts tell Carbon Brief there was initially tension in the attribution community between probabilistic and storyline advocates when the latter was first introduced. However, as the storyline method has become more mainstream, criticism has abated and many scientists are now publishing research using both techniques.

Van Garderen tells Carbon Brief that storyline attribution is “adding to the attribution toolbox”, rather than attempting to replace existing methods. She emphasises that probability-based and storyline attribution answer different questions, and that both are important.

How can weather forecasts be used in attribution studies?

Forecast attribution is the most recent major addition to the attribution toolbox. This method uses weather forecasts instead of climate models to carry out attribution studies. Many experts describe this method as sitting part-way between probabilistic and storyline attribution.

One benefit of using forecasts, rather than climate models, is that their higher resolution allows them to simulate extreme weather events in more detail. By using forecasts, scientists can also attribute events that have not yet happened.

The first use of “advance forecasted” attribution analysis (pdf) quantified the impact of climate change on the size, rainfall and intensity of Hurricane Florence before it made landfall in North Carolina in September 2018.

The authors, in essence, carried out the probabilistic attribution method, using two sets of short-term forecasts for the hurricane rather than large-scale climate models. The analysis received a mixed reaction. Stott told Carbon Brief at the time that it was “quite a cool idea”, but was highly dependent on being able to forecast such events reliably.

Dr Kevin Trenberth, distinguished senior scientist at the National Center for Atmospheric Research, told Carbon Brief in 2019 that the study was “a bit of a disaster”, explaining that the quality of the forecast was questionable for the assessment.

The authors subsequently published a paper in Science Advances reviewing their study “with the benefit of hindsight”. The authors acknowledged that the results were quite a way off what they had forecast. However, they also claimed to have identified what went wrong with their forecasted analysis.

Problems with the “without climate change” model runs created a larger contrast against their real-world simulations, meaning the analysis overestimated the impact of climate change on the event, they said.

Nonetheless, the study did identify a quantifiable impact of climate change on Hurricane Florence, adding to the evidence from studies by other author groups.

This research team has since published more forecast-based attribution studies on hurricanes. One study used hindcasts – forecasts that start from the past and then run forward into the present – to analyse the 2020 hurricane season. The team then ran a series of “counterfactual” hindcasts over the same period, with the influence of human-caused warming removed from sea surface temperatures.

They found that warmer waters increased three-hour rainfall rates and three-day accumulated rainfall for tropical storms by 10% and 5%, respectively, over the 2020 season.

Meanwhile, a 2021 study by a different team showed how it was possible to use traditional weather forecasts for attribution. The researchers, who penned a Carbon Brief guest post about their work, found that the European heatwave of February 2019 was 42% more likely for the British Isles and at least 100% more likely for France.

To conduct their study, the authors used a weather forecast model – also known as a “numerical weather prediction” model (NWP).

They explain that an NWP model typically runs at a higher resolution than a climate model, meaning that it has more, smaller grid cells. This allows it to simulate processes that a climate model cannot and makes such models “more suitable for studying the most extreme events than conventional climate models,” the authors argue.

More recently, Leach and his team carried out a forecast attribution study on the record-breaking Pacific north-west heatwave of 2021, years after the event took place.

The authors defined 29 June 2021 as the start of the event, as this is when the maximum temperature of the heatwave was recorded. They then ran their forecasts using a range of “lead times” – the number of days before the event starts that the model simulation is initialised.

The shortest lead time in this study was three days, meaning the scientists began running the model using the weather conditions recorded on 26 June 2021. The short lead time meant that they could tailor the model very closely to the weather conditions at this time and simulate the event itself very accurately.

By comparison, the longest lead times used in this study were 2-4 months. This means that the models were initialised in spring and, by the time they simulated the June heatwave, their simulation did not closely resemble the events that actually unfolded.
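
The link between an event's start date and a forecast's initialisation date can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the function name `initialisation_date` is hypothetical and lead times are assumed to be whole days.

```python
from datetime import date, timedelta


def initialisation_date(event_start: date, lead_days: int) -> date:
    """Return the date a forecast is initialised for a lead time given in days."""
    if lead_days < 0:
        raise ValueError("lead time must be zero or more days")
    return event_start - timedelta(days=lead_days)


# Event start as defined in the study described above.
event_start = date(2021, 6, 29)

# A three-day lead time gives 26 June 2021, matching the text.
print(initialisation_date(event_start, 3))  # 2021-06-26
```

Longer lead times simply push the initialisation date further back, which is why the longest runs described above started in spring.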

Leach tells Carbon Brief that by lengthening the lead time of the weather forecast, they can effectively “shift the dial” from storyline to probabilistic attribution. He explains:

“If you’re using a forecast that’s initialised really near to your event, then you’re kind of going down that storyline approach, by saying, ‘I want what my model is simulating to look really similar to the event I’m interested in’…

“The further back [in time] you go, the closer you get to the more probabilistic style of statements that are more unconditioned.”

This combination of storyline and probabilistic attribution allows the authors to draw conclusions about how climate change affected both the intensity and the likelihood of the heatwave. The authors estimate that the heatwave was 1.3C more intense and eight times more likely as a result of climate change.
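
As a rough illustration of how "more intense" and "more likely" statements can be derived, the sketch below compares two sets of simulated temperatures: one representing today's climate and one a "counterfactual" world without human-caused warming. It is a simplified example under stated assumptions, not the method used in the study. The function names, the synthetic data and the threshold are all hypothetical, and real studies use far more careful statistics.

```python
import random
import statistics


def exceedance_probability(ensemble, threshold):
    """Fraction of ensemble members at or above the threshold."""
    return sum(1 for value in ensemble if value >= threshold) / len(ensemble)


def attribution_summary(factual, counterfactual, threshold):
    """Compare a factual and a counterfactual ensemble of simulated temperatures."""
    p_factual = exceedance_probability(factual, threshold)
    p_counterfactual = exceedance_probability(counterfactual, threshold)
    # The ratio is unbounded if the event never occurs in the counterfactual runs.
    if p_counterfactual > 0:
        probability_ratio = p_factual / p_counterfactual
    else:
        probability_ratio = float("inf")
    # Intensity change: difference between the ensemble-mean temperatures.
    intensity_change = statistics.mean(factual) - statistics.mean(counterfactual)
    return {
        "probability_ratio": probability_ratio,
        "intensity_change": intensity_change,
    }


if __name__ == "__main__":
    # Synthetic ensembles for illustration only (assumed values, not real data).
    rng = random.Random(42)
    counterfactual = [rng.gauss(38.0, 2.0) for _ in range(5000)]
    factual = [rng.gauss(39.3, 2.0) for _ in range(5000)]
    print(attribution_summary(factual, counterfactual, threshold=43.0))
```

A probability ratio of eight would correspond to an event being "eight times more likely", while the intensity change is expressed in degrees.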

More recently, Climate Central has produced a tool that uses temperature forecasts for the US over the coming days to calculate a “climate shift index”. This index gives the ratio of how likely the forecast temperature is in today’s climate to how likely it would be in a world without climate change.

The index runs from five to minus five. A result of zero indicates that climate change has no detectable influence, an index of five means that climate change made the temperature at least five times more likely and an index of minus five means that climate change made the temperature at least five times less likely.
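
The scale described above can be sketched as a mapping from a likelihood ratio to an index level. This is an illustrative approximation rather than Climate Central's implementation: the function name `shift_index` is hypothetical and the cut-offs for levels one to four are assumptions, since only levels zero, five and minus five are described here.

```python
# Assumed cut-offs: (index level, minimum likelihood ratio).
# Only the +/-5 and 0 levels come from the text; the rest are illustrative.
LEVEL_THRESHOLDS = [(5, 5.0), (4, 4.0), (3, 3.0), (2, 2.0), (1, 1.5)]


def shift_index(p_today, p_without_warming):
    """Map two probabilities of the same temperature onto a -5..5 index."""
    if p_today <= 0 or p_without_warming <= 0:
        raise ValueError("probabilities must be positive")
    ratio = p_today / p_without_warming
    # Treat "less likely" as the mirror image of "more likely".
    sign = 1 if ratio >= 1 else -1
    magnitude = ratio if ratio >= 1 else 1 / ratio
    for level, minimum_ratio in LEVEL_THRESHOLDS:
        if magnitude >= minimum_ratio:
            return sign * level
    return 0  # no detectable influence


print(shift_index(0.5, 0.0625))   # eight times more likely -> 5
print(shift_index(0.0625, 0.5))   # eight times less likely -> -5
print(shift_index(0.25, 0.25))    # equally likely -> 0
```

Capping the index at five in either direction means that any ratio beyond that point is reported as "at least five times" more or less likely.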

The tool can be used for attribution. For example, recent analysis by the group used the index to quantify how climate change has influenced the number of uncomfortably hot nights. It concluded:

“Due to human-caused climate change, 2.4 billion people experienced an average of at least two additional weeks per year where nighttime temperatures exceeded 25C. Over one billion people experienced an average of at least two additional weeks per year of nights above 20C and 18C.”

What are the applications of attribution science?

One often-touted application of attribution studies is to raise awareness about the role of climate change in extreme weather events. However, there are limited studies about how effective this is.

One study presents the results of focus group interviews with UK scientists, who were not working on climate change, in which participants were given attribution statements. The study concludes:

“Extreme event attribution shows significant promise for climate change communication because of its ability to connect novel, attention-grabbing and event-specific scientific information to personal experiences and observations of extreme events.”

However, the study identified a range of challenges, including “adequately capturing nuances”, “expressing scientific uncertainty without undermining accessibility of key findings” and difficulties interpreting mathematical aspects of the results.

In another experiment, researchers informed nearly 4,000 adults in the US that climate change had made the July 2023 heatwave in the US at least five times more likely. The team also shared information from Climate Central’s climate shift index. According to the study, both approaches “increased the belief that climate change made the July 2023 heatwave more likely and is making heatwaves in general more likely as well”.

Meanwhile, as the science of extreme weather attribution becomes more established, lawyers, governments and civil society are finding more uses for this evolving field.

For example, attribution is starting to play an important role in courts. In 2017, two lawyers wrote a Carbon Brief guest post stating “we expect that attribution science will provide crucial evidence that will help courts determine liability for climate change related harm”.

Four years later, the authors of a study on “climate litigation” wrote a Carbon Brief guest post explaining how attribution science can be “translated into legal causality”. They wrote:

“Attribution can bridge the gap identified by judges between a general understanding that human-induced climate change has many negative impacts and providing concrete evidence of the role of climate change at a specific location for a specific extreme event that already has led or will lead to damages.”

In 2024, around 2,000 Swiss women used an attribution study, alongside other evidence, to win a landmark case in the European Court of Human Rights. The women, mostly in their 70s, said that their age and gender made them particularly vulnerable to heatwaves linked to climate change. The court ruled that Switzerland’s efforts to meet its emissions targets had been “woefully inadequate”.

The 2024 European Geosciences Union conference in Vienna dedicated an entire session to climate change and litigation. Prof Wim Thiery – a scientist who was involved in many conference sessions on climate change and litigation – tells Carbon Brief that attribution science is particularly important for supporting “reparation cases”, in which vulnerable countries or communities seek compensation for the damages caused by climate change.

He adds that seeing the “direct and tangible impact” of an attribution study in a court case “motivates climate scientists in engaging in this community”.

(Other types of science are also important in court cases related to climate change, he added. For example, “source attribution” identifies the relative contribution of different sectors and entities – such as companies or governments – to climate change.)

Dr Rupert Stuart-Smith, a research associate in climate science and the law at the University of Oxford’s Sustainable Law Programme, adds:

“We’re seeing a new evolution whereby communities are increasingly looking at impact-relevant variables. Think about inundated areas, lake levels, heatwave mortalities. These are the new target variables of attribution science. This is a new frontier and we are seeing that those studies are directly usable in court cases.”

He tells Carbon Brief that some cases “have sought to hold high-emitting corporations – such as fossil fuel or agricultural companies – liable for the costs of climate change impacts”. He continues:

“In cases like these, claimants typically need to show that climate change is causing specific harms affecting them and courts may leverage attribution or climate projections to adjudicate these claims. Impact attribution is particularly relevant in this context.”

Dr Delta Merner is a lead scientist at the science hub for climate litigation. She tells Carbon Brief that “enhanced source attribution for companies and countries” will be “critical” for holding major emitters accountable. She adds:

“This is an urgent time for the field of attribution science, which is uniquely capable of providing robust, actionable evidence to inform decision-making and drive accountability.”

Meanwhile, many countries’ national weather services are working on “operational attribution” – the regular production of rapid attribution assessments.

Stott tells Carbon Brief that the UK Met Office is operationalising attribution studies. For example, on 2 January 2024, it announced that 2023 was the second-warmest year on record for the UK, with an average temperature of 9.97C.

New methods are also being developed. For example, groups, such as the “eXtreme events: Artificial Intelligence for Detection and Attribution” (XAIDA) team, are researching the use of machine learning and artificial intelligence for attribution studies.

One recent attribution study uses a machine-learning approach to create “dynamically consistent counterfactual versions of historical extreme events under different levels of global mean temperature”. The authors estimate that the south-central North American heatwave of 2023 was 1.18-1.42C warmer because of global warming.

The authors conclude:

“Our results broadly agree with other attribution techniques, suggesting that machine learning can be used to perform rapid, low-cost attribution of extreme events.”

Other scientists are using a method called UNSEEN, which involves running models thousands of times to enlarge the datasets used, making it easier to derive accurate probabilities for highly variable extremes.
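
The idea of pooling many model runs to estimate the probability of a rare event can be sketched as follows. This is a simplified illustration of the general principle, not the UNSEEN method itself: the function names and the synthetic data are hypothetical, and it assumes each simulated value is an independent annual maximum.

```python
import random
from itertools import chain


def pooled_exceedance_probability(ensemble_runs, threshold):
    """Pool all simulated values and return the fraction at or above the threshold."""
    pooled = list(chain.from_iterable(ensemble_runs))
    if not pooled:
        raise ValueError("no simulated values supplied")
    exceedances = sum(1 for value in pooled if value >= threshold)
    return exceedances / len(pooled)


def return_period_years(annual_probability):
    """Convert an annual exceedance probability into a return period in years."""
    if annual_probability <= 0:
        return float("inf")
    return 1.0 / annual_probability


if __name__ == "__main__":
    # Synthetic example: 100 model runs, each with 40 simulated annual maxima.
    rng = random.Random(0)
    runs = [[rng.gauss(30.0, 2.5) for _ in range(40)] for _ in range(100)]
    probability = pooled_exceedance_probability(runs, threshold=37.0)
    print(probability, return_period_years(probability))
```

With 4,000 simulated years instead of a few decades of observations, an event that occurs roughly once a century appears many times in the pooled sample, rather than once or not at all.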

What are the next steps for attribution research?

The experts that Carbon Brief spoke to for this article have high hopes for the future of attribution science. For example, Stott says:

“Attribution science has great potential to improve the resilience of societies to future climate change, can help monitor progress towards the Paris goals of keeping global warming to well below 2C and can motivate progress in driving down emissions towards net-zero by the middle of this century.”

However, despite the progress made over the past two decades, there are still challenges to overcome. One of the key barriers in attribution science is a lack of high-quality observational data in low-income countries.

To carry out an attribution study, researchers need a long, high-quality dataset of observations from the area being studied. However, inadequate funding or political instability means that many developing countries do not have sufficient weather station data.

In a 2016 interview with Carbon Brief, Allen said that “right now there is obviously a bias towards our own backyards – north-west Europe, Australia and New Zealand.”

Many WWA studies in global-south countries mention the challenge of finding adequate data and sometimes this affects the results. A WWA study of the 2022 drought in west Africa’s Sahel region was unable to find the signal of climate change in the region’s rainfall pattern – in part, due to widespread uncertainties in the observational data.

Otto, who was an author on the study, explained at the time:

“It could either be because the data is quite poor or because we have found the wrong indices. Or it could be because there really is no climate change signal…We have no way of identifying which of these three options it is.”

Developing better observational datasets is an ongoing challenge. It is highlighted in much of the literature on attribution as an important next step for attribution science – and for climate science more widely. Merner tells Carbon Brief that scientists also need to work on developing “novel approaches for regions without baseline data”.

Meanwhile, many scientists expect the methods used in attribution science to continue evolving. The Detection and Attribution Model Intercomparison Project is currently collecting simulations, which will support improved attribution of climate change in the next set of assessment reports from the Intergovernmental Panel on Climate Change.

Mitchell says that, over the next decade, he thinks that “we will move away from the more generic attribution methods that have served us well to this point, and start developing and applying more targeted – and even more defensible – methods”.

In particular, he highlights the need for more specific methods for impact attribution – for example, studying the impacts of weather events on health outcomes, biodiversity changes or financial losses.

He continues:

“The interplay of different socioeconomic states and interventions with that of climate change can make these particularly difficult to study – but we are getting there with our more advanced, albeit computationally expensive methods, such as using weather forecast models as the foundation of our attribution statements.”

Stott tells Carbon Brief that incorporating impacts into attribution assessments is a “crucial area for development” in attribution science. He explains that impact attribution is “very relevant to the loss-and-damage agenda and further developments in attribution science are likely to include the ability to attribute the financial costs of storms”.

Stuart-Smith tells Carbon Brief that, “in the coming years, growing numbers of studies will quantify the economic burden of climate change and its effects on a broader range of health impacts, including from vector and water-borne diseases”.

Leach also tells Carbon Brief that it is “important for attribution to move their focus beyond physical studies and into quantitative impact studies to increase their relevance and utility in policy and the media”.

He adds:

“Utilising weather forecasts for attribution would fit neatly with this aim as those same models are already widely used by emergency managers and built into impact modelling frameworks.”

Similarly, Stott tells Carbon Brief that “forecast attribution shows great potential”. He explains that “progressing that science” will allow this method to be used to attribute more types of extreme weather with greater confidence.

Leach advocates for greater use of weather forecast models for all types of attribution. He says:

“Weather forecast models have demonstrated repeatedly over the past few years that they are capable of accurately representing even unprecedented weather extremes. Using these validated state-of-the-art models for attribution could bring an increase in confidence in the results.”

Many scientists also tell Carbon Brief about the importance of operationalising attribution. The weather services in many countries already have this in place. Stott tells Carbon Brief that groups in Japan, South Korea, Australia and the US are also “at various stages of developing operational attribution services”.

Meanwhile, Otto tells Carbon Brief that “the most important next step for attribution in my view is to really integrate the assessment of vulnerability and exposure into the attribution studies”. She adds:

“In order for attribution to truly inform adaptation it is essential though to go from attributing hazards, as we do now mainly, to disentangling drivers of disasters.”

Mitchell adds that he thinks attribution statements “are absolutely essential for [countries to make] national adaptation plans”.

Meanwhile, another study suggests that extreme event attribution studies could be used by engineers, along with climate projections, to assist climate adaptation for civil infrastructure.

Leach tells Carbon Brief that attribution could be useful in the insurance sector for similar reasons. He adds that many insurers use the same forecasts in their catastrophe models that climate scientists use for forecast attribution, meaning that it should be straightforward to add attribution studies into their pipelines.

The post Q&A: The evolving science of ‘extreme weather attribution’ appeared first on Carbon Brief.

Greenhouse Gases

Q&A: What does Trump’s repeal of US ‘endangerment finding’ mean for climate action?

On 12 February, US president Donald Trump revoked the “endangerment finding”, the bedrock of federal climate policy.

The 2009 finding concluded that six key greenhouse gases, including carbon dioxide (CO2), were a threat to human health – triggering a legal requirement to regulate them.

It has been key to the rollout of policies such as federal emission standards for vehicles, power plants, factories and other sources.

Speaking at the White House, US Environmental Protection Agency (EPA) administrator Lee Zeldin claimed that the “elimination” of the endangerment finding would save “trillions”.

The revocation is expected to face multiple legal challenges, but, if it succeeds, it is expected to have a “sweeping” impact on federal emissions regulations for many years.

Nevertheless, US emissions are expected to continue falling, albeit at a slower pace.

Carbon Brief takes a look at what the endangerment finding was, how it has shaped US climate policy in the past and what its repeal could mean for action in the future.

- What is the ‘endangerment finding’?

- How has it shaped federal climate policy?

- How is the finding being repealed and will it face legal challenge?

- What does this mean for federal efforts to address climate change?

- What has the reaction been?

- What will the repeal mean for US emissions?

What is the ‘endangerment finding’?

The challenges of passing climate legislation in the US have meant that the federal government has often turned instead to regulations – principally, under the 1970 Clean Air Act.

The act requires the EPA to regulate pollutants, if they are found to pose a danger to public health and the environment.

In a 2007 legal case known as Massachusetts vs EPA, the Supreme Court ruled that greenhouse gases qualify as pollutants under the Clean Air Act. It also directed the EPA to determine whether these gases posed a threat to human health.

The 2009 “endangerment finding” was the result of this process and found that greenhouse gas emissions do indeed pose such a threat. Subsequently, it has underpinned federal emissions regulations for more than 15 years.

In developing the endangerment finding, the EPA pulled together evidence from its own experts, the US National Academies of Sciences, Engineering and Medicine and the wider scientific community.

On 7 December 2009, it concluded that US greenhouse gas emissions “in the atmosphere threaten the public health and welfare of current and future generations”.

In particular, the finding highlighted six “well-mixed” greenhouse gases: carbon dioxide (CO2); methane (CH4); nitrous oxide (N2O); hydrofluorocarbons (HFCs); perfluorocarbons (PFCs); and sulfur hexafluoride (SF6).

A second part of the finding stated that new vehicles contribute to the greenhouse gas pollution that endangers public health and welfare, opening the door to these emissions being regulated.

At the time, the EPA noted that, while the finding itself does not impose any requirements on industry or other entities, “this action was a prerequisite for implementing greenhouse gas emissions standards for vehicles and other sectors”.

On 15 December 2009, the finding was published in the federal register – the official record of US federal legislation – and the final rule came into effect on 14 January 2010.

At the time, then-EPA administrator Lisa Jackson said in a statement:

“This finding confirms that greenhouse gas pollution is a serious problem now and for future generations. Fortunately, it follows President [Barack] Obama’s call for a low-carbon economy and strong leadership in Congress on clean energy and climate legislation.

“This pollution problem has a solution – one that will create millions of green jobs and end our country’s dependence on foreign oil.”

How has it shaped federal climate policy?

The endangerment finding originated from a part of the Clean Air Act regulating emissions from new vehicles and so it was first applied in that sector.

However, it came to underpin greenhouse gas emission regulation across a range of sectors.

In May 2010, shortly after the Obama EPA finalised the finding, it was used to set the country’s first-ever limits on greenhouse gas emissions from light-duty engines in motor vehicles.

The following year, the EPA also released emissions standards for heavy-duty vehicles and engines.

However, findings made under one part of the Clean Air Act can also be applied to other articles of the law. David Widawsky, director of the US programme at the World Resources Institute (WRI), tells Carbon Brief:

“You can take that finding – and that scientific basis and evidence – and apply it in other instances where air pollutants are subject or required to be regulated under the Clean Air Act or other statutes.

“Revoking the endangerment finding then creates a thread that can be pulled out of not just vehicles, but a whole lot of other [sources].”

Since being entered into the federal register, the endangerment finding has also been applied to stationary sources of emissions, such as fossil-fuelled power plants and factories, as well as an expanded range of non-stationary emissions sources, including aviation.

(In fact, the EPA is compelled to regulate emissions of a pollutant – such as CO2 as identified in the endangerment finding – from stationary sources, once it has been regulated anywhere else under the Clean Air Act.)

In 2015, the EPA finalised its guidance on regulating emissions from fossil-fuelled power plants. These performance standards applied to newly constructed plants, as well as those that underwent major modifications.

This ruling noted that “because the EPA is not listing a new source category in this rule, the EPA is not required to make a new endangerment finding…in order to establish standards of performance for the CO2”.

The following year, the agency established rules on methane emissions from oil and gas sources, including wells and processing plants. Again, this was based on the 2009 finding.

The 2016 aircraft endangerment finding also explicitly references the vehicle-emissions endangerment finding. That rule says that the “body of scientific evidence amassed in the record for the 2009 endangerment finding also compellingly supports an endangerment finding” for aircraft.

The endangerment finding has also played a critical role in shaping the trajectory of climate litigation in the US.

In a 2011 case, American Electric Power Co. vs Connecticut, the Supreme Court unanimously found that, because greenhouse gas emissions were already regulated by the EPA under the Clean Air Act, companies could not be sued under federal common law over their greenhouse gas emissions.

Widawsky tells Carbon Brief that repealing the endangerment finding therefore “opens the door” to climate litigation of other kinds:

“When plaintiffs would introduce litigation in federal courts, the answer or the courts would find that EPA is ‘handling it’ and there’s not necessarily a basis for federal litigation. By removing the endangerment finding…it actually opens the door to the question – not necessarily successful litigation – and the courts will make that determination.”

How is the finding being repealed and will it face legal challenge?

The official revocation of the endangerment finding is yet to be posted to the federal register. It will be effective 60 days after the text is published in the journal.

It is set to face no shortage of legal challenges. The state of California has “vowed” to sue, as have a number of environmental groups, including Sierra Club, Earthjustice and the Natural Resources Defense Council (NRDC).

Dena Adler, an adjunct professor of law at New York University School of Law, tells Carbon Brief there are “significant legal and analytical vulnerabilities” in the EPA’s ruling. She explains:

“This repeal will only stick if it can survive legal challenge in the courts. But it could take months, if not years, to get a final judicial decision.”

At the heart of the federal agency’s argument is the claim that it lacks the authority to regulate greenhouse gas emissions in response to “global climate change concerns” under the Clean Air Act.

In the ruling, the EPA says the section of the Act focused on vehicle emissions is “best read” as authorising the agency to regulate air pollution that harms the public through “local or regional exposure” – for instance, smog or acid rain – but not pollution from “well-mixed” greenhouse gases that, it claims, “impact public health and welfare only indirectly”.

This distinction directly contradicts the landmark 2007 Supreme Court decision in Massachusetts vs EPA. (See: What is the ‘endangerment finding’?)

The EPA’s case also rests on an argument that the agency violated the “major questions doctrine” when it started regulating greenhouse gas emissions from vehicles.

This legal principle holds that federal agencies need explicit authorisation from Congress to press ahead with actions in certain “extraordinary” cases.

In a policy brief in January, legal experts from New York University School of Law’s Institute for Policy Integrity argued that the “major questions doctrine” argument “fails for several reasons”.

Regulating greenhouse gas emissions under the Clean Air Act is “neither unheralded nor transformative” – both of which are needed for the legal principle to apply, the lawyers said.

Furthermore, the policy brief noted that – even if the doctrine were triggered – the Clean Air Act does, in fact, supply the EPA with the “clear authority” required.

Mark Drajem, director of public affairs at NRDC, says the endangerment finding has been “firmly established in the courts”. He tells Carbon Brief:

“In 2007, the Supreme Court directed EPA to look at the science and determine if greenhouse gases pose a risk to human health and welfare. EPA did that in 2009 and federal courts rejected a challenge to that in 2012.

“Since then, the Supreme Court has considered EPA’s greenhouse gas regulations three separate times and never questioned whether it has the authority to regulate greenhouse gases. It has only ruled on how it can regulate that pollution.”

However, experts have noted that the Trump administration is banking on legal challenges making their way to the Supreme Court – and the now conservative-leaning bench then upholding the repeal of the endangerment finding.

Elsewhere, the EPA’s new ruling argues that regulating emissions from vehicles has “no material impact on global climate change concerns…much less the adverse public health or welfare impacts attributed to such global climate trends”.

“Climate impact modelling”, it continues, shows that “even the complete elimination of all greenhouse gas emissions” of vehicles in the US would have impacts that fall “within the standard margin of error” for global temperature and sea level rise.

In this context, it argues, regulations on emissions are “futile”.

(The US is more historically responsible for climate change than any other country. In its 2022 sixth assessment report, the Intergovernmental Panel on Climate Change said that further delaying action to cut emissions would “miss a brief and rapidly closing window of opportunity to secure a liveable and sustainable future for all”.)

However, the final rule stops short of attempting to justify the plans by disputing the scientific basis for climate change.

Notably, the EPA has abandoned plans to rely on the findings of a controversial climate science report commissioned by the Department of Energy (DoE) last year.

This is a marked departure from the draft ruling, published in August, which argued there were “significant questions and ambiguities presented by both the observable realities of the past nearly two decades and the recent findings of the scientific community, including those summarised in the draft CWG [‘climate working group’] report”.

The CWG report – written by five researchers known for rejecting the scientific consensus on human influence on global warming – faced significant criticism for inaccurate conclusions and a flawed review process. (Carbon Brief’s factcheck found more than 100 misleading or false statements in the report.)

A judge ruled in January that the DoE had broken the law when energy secretary Chris Wright “hand-picked five researchers who reject the scientific consensus on climate change to work in secret on a sweeping government report on global warming”, according to the New York Times.

In a press release in July, the EPA said “updated studies and information” set out in the CWG report would serve to “challenge the assumptions” of the 2009 finding.

But, in the footnotes to its final ruling, the EPA notes it is not relying on the report for “any aspect of this final action” in light of “concerns raised by some commenters”.

Legal experts have argued that the pivot away from arguments undermining climate science is designed with future legal battles over the attempted repeal in mind.

What does this mean for federal efforts to address climate change?

As mentioned above, a number of groups have already filed legal actions against the Trump administration’s move to repeal the endangerment finding – leaving the future uncertain.

However, if the repeal does survive legal challenges, it would have far-reaching implications for federal efforts to address greenhouse gas emissions, experts say.

In a blog post, the WRI’s Widawsky said that the repeal would have a “sweeping” impact on federal emissions regulations for cars, coal-fired power stations and gas power plants, adding:

“In practical terms, without the endangerment finding, regulating greenhouse gas emissions is no longer a legal requirement. The science hasn’t changed, but the obligation to act on it has been removed.”

Speaking to Carbon Brief, Widawsky adds that, despite this large immediate impact, there are “a lot of mechanisms” future US administrations might be able to pursue if they wanted to reinstate the federal government’s obligation to address greenhouse gas emissions:

“Probably the most direct way – rather than talk about ‘pollutants’, in general, and the EPA, say, making a science-specific finding for that pollutant – [is] for Congress simply to declare a particular pollutant to be a hazard for human health and welfare. [This] has been done in other instances.”

If federal efforts to address greenhouse gas emissions decline, there will likely still be attempts to regulate at the state level.

Previous analysis from the University of Oxford noted that, despite a walkback on federal climate policy in Trump’s second presidential term, 19 US states – covering nearly half of the country’s population – remain committed to net-zero targets.

Widawsky tells Carbon Brief that it is possible that states may be able to leverage legislation, including the Clean Air Act, to enact regulations to address emissions at the state level.

However, in some cases, states may be prevented from doing so by “preemption”, a US legal doctrine where higher-level federal laws override lower-level state laws, he adds:

“There are a whole lot of other sections of the Clean Air Act that may either inhibit that kind of ability for states to act through preemption or allow for that to happen.”

What has the reaction been?

The Trump administration’s decision has received widespread global condemnation, although it has been celebrated by some right-wing newspapers, politicians and commentators.

In the US, former president Barack Obama said on Twitter that the move will leave Americans “less safe, less healthy and less able to fight climate change – all so the fossil-fuel industry can make even more money”.

Similarly, California governor Gavin Newsom called the decision “reckless”, arguing that it will lead to “more deadly wildfires, more extreme heat deaths, more climate-driven floods and droughts and greater threats to communities nationwide”.

Former US secretary of state and climate envoy John Kerry called the decision “un-American”, according to a story on the front page of the Guardian. He continued:

“[It] takes Orwellian governance to new heights and invites enormous damage to people and property around the world.”

An editorial in the Guardian dubbed the repeal “just one part of Trump’s assault on environmental controls and promotion of fossil fuels”, but added that it “may be his most consequential”.

Similarly, an editorial in the Hindu said that Trump is “trying to turn back the clock on environmental issues”.

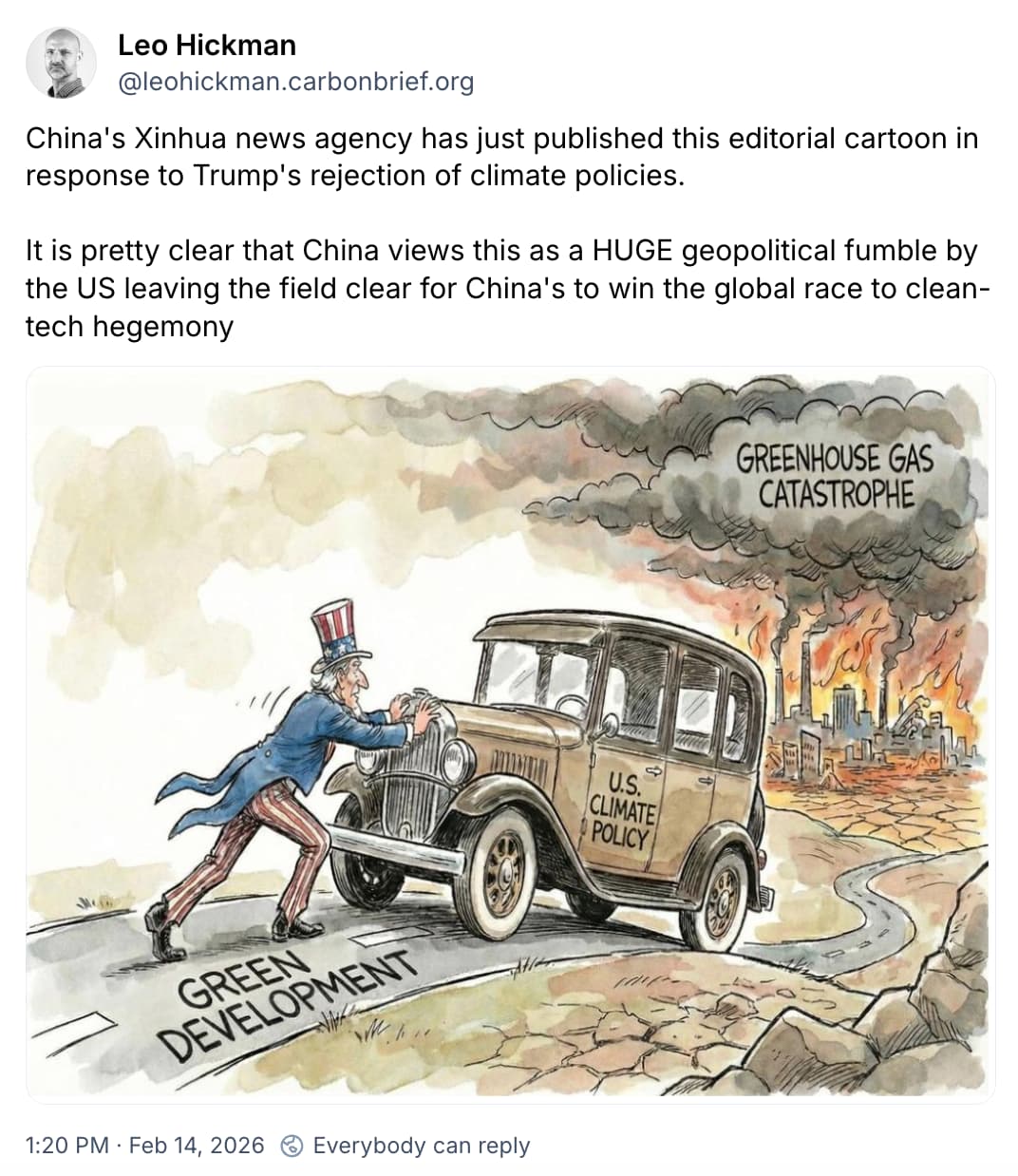

In China, state-run news agency Xinhua published a cartoon depicting Uncle Sam attempting to turn an ageing car, marked “US climate policy”, away from the road marked “green development”, back towards a city engulfed in flames and pollution that swells towards dark clouds labelled “greenhouse gas catastrophe”.

Conversely, Trump described the finding as “the legal foundation for the green new scam”, which he claimed “the Obama and Biden administration used to destroy countless jobs”.

Similarly, Al Jazeera reported that EPA administrator Zeldin said the endangerment finding “led to trillions of dollars in regulations that strangled entire sectors of the US economy, including the American auto industry”. The outlet quoted him saying:

“The Obama and Biden administrations used it to steamroll into existence a left-wing wish list of costly climate policies, electric vehicle mandates and other requirements that assaulted consumer choice and affordability.”

An editorial in the Washington Post also praised the move, saying “it’s about time” that the endangerment finding was revoked. It argued – without evidence – that the benefits of regulating emissions are “modest” and that “free-market-driven innovation has done more to combat climate change than regulatory power grabs like the ‘endangerment finding’ ever did”.

The Heritage Foundation – the climate-sceptic US lobby group that published the influential “Project 2025” document before Trump took office – has also celebrated the decision.

Time reported that the group previously criticised the endangerment finding, saying that it was used to “justify sweeping restrictions on CO2 and other greenhouse gas emissions across the economy, imposing huge costs”. The magazine added that Project 2025 laid out plans to “establish a system, with an appropriate deadline, to update the 2009 endangerment finding”.

Climate scientists have also weighed in on the administration’s repeal efforts. Prof Andrew Dessler, a climate scientist at Texas A&M University in College Station, argued that there is “no legitimate scientific rationale” for the EPA decision.

Similarly, Dr Katharine Hayhoe, chief scientist at the Nature Conservancy, said in a statement that, since the establishment of the 2009 endangerment finding, the evidence showing greenhouse gases pose a threat to human health and the environment “has only grown stronger”.

Dr Gretchen Goldman, president and CEO of the Union of Concerned Scientists and a former White House official, gave a statement, arguing that “ramming through this unlawful, destructive action at the behest of polluters is an obvious example of what happens when a corrupt administration and fossil fuel interests are allowed to run amok”.

In the San Francisco Chronicle, Prof Michael Mann, a climate scientist at the University of Pennsylvania, and Bob Ward, policy and communications director at the Grantham Research Institute, wrote that Trump is “slowing climate progress”, but that “it won’t put a stop to global climate action”. They added:

“The rest of the world is moving on and thanks to Trump’s ridiculous insistence that climate change is a ‘hoax’, the US now stands to lose out in the great economic revolution of the modern era – the clean-energy transition.”

What will the repeal mean for US emissions?

Federal regulations and standards underpinned by the endangerment finding have been at the heart of US government plans to reduce the nation’s emissions.

For example, NRDC analysis of EPA data suggests that Biden-era vehicle standards, combined with other policies to boost electric cars, were set to avoid nearly 8bn tonnes of CO2 equivalent (GtCO2e) over the next three decades.

By removing the legal requirement to regulate greenhouse gases at a federal level from such high-emitting sectors, the EPA could instead be driving higher emissions.

Nevertheless, some climate experts argue that the repeal is more of a “symbolic” action and that EPA regulations have not historically been the main drivers of US emissions cuts.

Rhodium Group analysis last year estimated the impact of the EPA removing 31 regulatory policies, including the endangerment finding and “actions that rely on that finding”. Most of these had already been proposed for repeal independently by the Trump administration.

Ben King, the organisation’s climate and energy director, tells Carbon Brief this “has the same effect on the system as repealing the endangerment finding”.

The Rhodium Group concluded that, in this scenario, emissions would continue falling to 26-35% below 2005 levels by 2035, as the chart below shows. If the regulations remained in place, it estimated that emissions would fall faster, by around 32-44%.

(Notably, neither of these scenarios would be in line with the Biden administration’s international climate pledge, which was a 61-66% reduction by 2035.)

There are various factors that could contribute to continued – albeit slower – decline in US emissions, in the absence of federal regulations. These include falling costs for clean technologies, higher fossil-fuel prices and state-level legislation.

Despite Trump’s rhetoric, coal plants have become uneconomic to operate in the US compared with cheaper renewables and gas. As a result, Trump has overseen a larger reduction in coal-fired capacity than any other US president.

Meanwhile, in spite of the openly hostile policy environment, relatively low-cost US wind and solar projects are competitive with gas power and are still likely to be built in large numbers.

The vast majority of new US power capacity in recent years has been solar, wind and storage. Around 92% of power projects seeking electricity interconnection in the US are solar, wind and storage, with the remainder nearly all gas.

The broader transition to low-carbon transport is well underway in the US, with electric vehicle sales breaking records during nearly every month in 2025.

This can partly be attributed to federal tax credits, which the Trump administration is now cutting. However, cheaper models, growing consumer preference and state policies are likely to continue strengthening support.

Even if emissions continue on a downward trajectory, repealing the endangerment finding could make it harder to drive more ambitious climate action in the future. Some climate experts also point to the uncertainty of future emissions reductions.

“[It] depends on a number of technology, policy, economic and behavioural factors. Other folks are less sanguine about greenhouse gas declines,” WRI’s Widawsky tells Carbon Brief.

DeBriefed 13 February 2026: Trump repeals landmark ‘endangerment finding’ | China’s emissions flatlining | UK’s ‘relentless rain’

Welcome to Carbon Brief’s DeBriefed.

An essential guide to the week’s key developments relating to climate change.

This week

Landmark ruling repealed

DANGER DANGER: The Trump administration formally repealed the US’s landmark “endangerment finding” this week, reported the Financial Times. The 2009 Obama-era finding concluded that greenhouse gases pose a threat to public health and has provided a legal basis for their regulation over the past two decades, said the New York Times.

RACE TO COURT: Multiple environmental groups have already threatened to sue over the administration’s decision, reported the Guardian. The fate of the ruling is likely to ultimately be decided by the conservative-majority Supreme Court, explained the New York Times.

‘BEAUTIFUL CLEAN COAL’: Separately, Donald Trump signed an executive order requiring the Pentagon to buy coal-fired power, a move aimed to “revive a fuel source in sharp decline”, reported the Los Angeles Times. Despite his efforts, Trump has overseen more retirements of coal-fired power stations than any other US president, according to Carbon Brief analysis.

Around the world

- CLIMATE TALKS: UN climate chief Simon Stiell said in a speech on Thursday that climate action can deliver stability in the face of a “new world disorder” while on a visit to Turkey, which will host the COP31 climate summit later this year, reported BusinessGreen.

- IBERIAN CATASTROPHE: A succession of storms that hit Spain and Portugal in recent weeks has caused millions of euros’ worth of damage to farmlands and required more than 11,000 people to leave their homes in Spain’s southern Andalusia region, said Reuters.

- RISKY BUSINESS: The “undervaluing” of nature by businesses is fuelling its decline and putting the global economy at risk, according to a new report by the Intergovernmental Science-Policy Platform on Biodiversity and Ecosystem Services (IPBES), covered by Carbon Brief. Carbon Brief interviewed IPBES chair Dr David Obura at the report’s launch in Manchester.

- CORAL BLEACHING: A study covered by Agence France-Presse found that more than half of the world’s coral reefs were bleached over a three-year period from 2014-17 during Earth’s third “global bleaching event”. The world has since entered a fourth bleaching event, starting in 2023, a scientist told AFP.

- ‘HELLISH HOTHOUSE EARTH’: In a commentary paper, scientists argued that the world is closer than thought to a “point of no return”, which could plunge Earth into a “hellish hothouse” state, reported the Guardian.

7.4 gigawatts

The record amount of solar, onshore wind and tidal power secured in the latest auction for new renewable capacity in the UK, reported Carbon Brief.

Latest climate research

- Human-caused climate change made the hot, dry and windy weather in Chile and Argentina three times more likely | World Weather Attribution (Carbon Brief also covered the study)

- “Early-life” exposure to extreme heat “increases risk” of neurodevelopmental delay in preschool children | Nature Climate Change

- Climate change, urbanisation and species characteristics shape European butterfly population trends | Global Ecology and Biogeography

(For more, see Carbon Brief’s in-depth daily summaries of the top climate news stories on Monday, Tuesday, Wednesday, Thursday and Friday.)

Captured

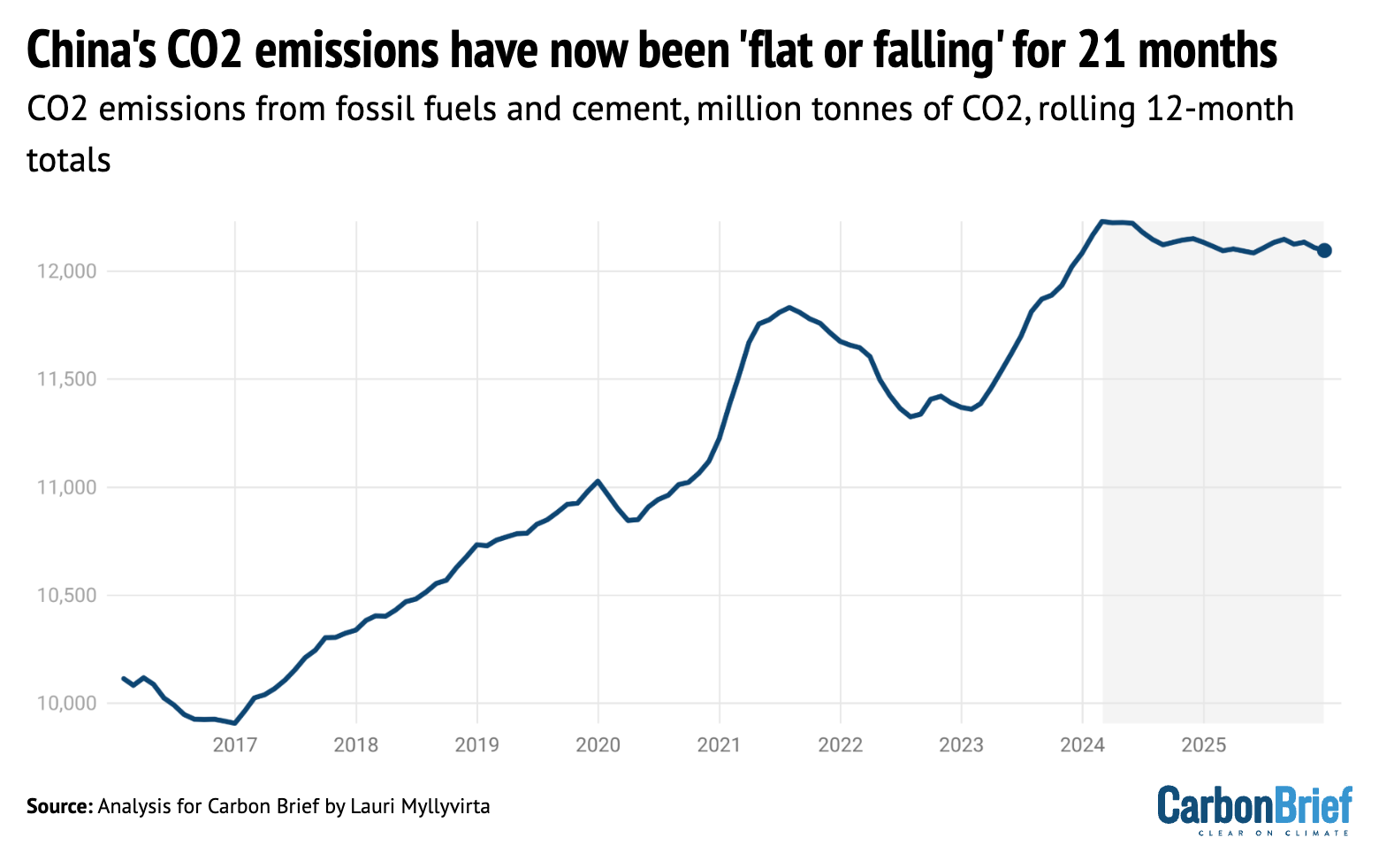

China’s carbon dioxide emissions have “now been flat or falling for 21 months”, analysis for Carbon Brief has found. The trend began in March 2024 and has lasted almost two years, due in particular to falling emissions in major sectors, including transport, power and cement, said the analysis. The analysis has been covered widely in global media, including Agence France-Presse, Bloomberg, New York Times, BBC World Service and Channel 4 News.

Spotlight

UK’s ‘relentless rain’

This week, Carbon Brief takes a deep dive into the recent relentless rain and floods in the UK and explores how they could be linked to climate change.

It is no secret that it can rain a lot in the UK. But, in some parts of the country, it has rained every day of the year so far, according to Met Office data released this week.

In total, 26 stations set new monthly rainfall records for January. Northern Ireland experienced its wettest January for 149 years and Plymouth, in the south-west of England, experienced its wettest January day in 104 years.

Areas witnessing long periods of rain included Bodmin Moor in Cornwall, which has seen 41 consecutive days of rain “and counting”, reported the Guardian. The University of Reading found that its home town had its longest period of consecutive rain – 25 days – since its records for the city began in 1908.

The relentless rainfall has caused flooding in many parts of the country, particularly in rural areas.

There were more than 200 active flood alerts in place across England and Wales at the weekend, with flood warnings clustered around Gloucester and Worcester in the West Midlands, as well as Devon and Hampshire in southern England. A flood “alert” means that there is a possibility of flooding, while a “warning” means flooding is expected.

“Growing up, the road to my school never flooded. But the school has already had to close three times this year because of flooding,” Jess Powell, a local resident of a small village in Shropshire, told Carbon Brief.

Climate link

While there has not yet been a formal analysis into the role of climate change in the UK’s current lengthy period of rain and flooding, it is known that human-caused warming can play a role in wet weather extremes, explained Dr Jess Neumann, a flooding researcher from the University of Reading. She told Carbon Brief:

“Warmer air can hold more moisture – about 7% more for every 1C of warming, increasing the chance of more frequent and at times, intense rainfall.”
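As a rough illustration of that rule of thumb, the sketch below shows how a rate of about 7% per 1C adds up over different amounts of warming. It assumes the increase compounds with each degree, which is an illustrative assumption rather than something stated in the quote, and the function name and the warming values are hypothetical.

```python
def extra_moisture_fraction(warming_c: float, rate_per_c: float = 0.07) -> float:
    """Fractional increase in the moisture air can hold after `warming_c` degrees C of warming.

    Assumes the roughly 7%-per-1C figure compounds with each degree. This is an
    illustrative assumption: the quote only gives the per-degree rate.
    """
    return (1.0 + rate_per_c) ** warming_c - 1.0


# Hypothetical warming levels, chosen only to show how the rate accumulates.
for warming in (1.0, 1.5, 2.0):
    increase = extra_moisture_fraction(warming)
    print(f"{warming:.1f}C of warming: about {increase:.1%} more moisture")
```

Under this assumption, 2C of warming corresponds to slightly more than double the 1C figure, because each degree's increase applies to an already larger moisture capacity.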

The UK owes its rainy climate in large part to the jet stream, which brings strong winds from west to east and pushes low-pressure weather systems across the Atlantic.

Scientists have said that one of the factors behind the UK’s relentless rain is the “blocking” of the jet stream, which occurs when winds slow, causing rainy weather patterns to get stuck.

The impact of climate change on the jet stream is complex, involving a lot of different factors. One theory, still subject to debate among scientists, is that Arctic warming could play a role, explained Neumann: